OpenAI Java SDK——chatgpt-java

简介

chatgpt-java是一个OpenAI的Java版SDK,支持开箱即用。目前以支持官网全部Api。支持最新版本GPT-3.5-Turbo模型以及whisper-1模型。增加chat聊天对话以及语音文件转文字,语音翻译。

开源地址:https://github.com/Grt1228/chatgpt-java

快速开始

导入pom依赖

<dependency>

<groupId>com.unfbx</groupId>

<artifactId>chatgpt-java</artifactId>

<version>1.0.3</version>

</dependency>

package com.unfbx.eventTest.test;

import com.unfbx.chatgpt.OpenAiClient;

import com.unfbx.chatgpt.entity.completions.CompletionResponse;

import java.util.Arrays;

public class TestB {

public static void main(String[] args) {

//代理可以为null

Proxy proxy = new Proxy(Proxy.Type.HTTP, new InetSocketAddress("192.168.1.111", 7890));

OpenAiClient openAiClient = OpenAiClient.builder()

.apiKey("sk-**************")

.proxy(proxy)

.build();

//简单模型

//CompletionResponse completions = //openAiClient.completions("我想申请转专业,从计算机专业转到会计学专业,帮我完成一份两百字左右的申请书");

//最新GPT-3.5-Turbo模型

Message message = Message.builder().role(Message.Role.USER).content("你好啊我的伙伴!").build();

ChatCompletion chatCompletion = ChatCompletion.builder().messages(Arrays.asList(message)).build();

ChatCompletionResponse chatCompletionResponse = openAiClient.chatCompletion(chatCompletion);

chatCompletionResponse.getChoices().forEach(e -> {

System.out.println(e.getMessage());

});

}

}

支持流式输出

官方对于解决请求缓慢的情况推荐使用流式输出模式。

主要是基于SSE 实现的(可以百度下这个技术)。也是最近在了解到SSE。OpenAI官网在接受Completions接口的时候,有提到过这个技术。 Completion对象本身有一个stream属性,当stream为true时候Api的Response返回就会变成Http长链接。 具体可以看下文档:https://platform.openai.com/docs/api-reference/completions/create

package com.unfbx.chatgpt;

********************

/**

* @author https:www.unfbx.com

* 2023-02-28

*/

public class OpenAiStreamClientTest {

private OpenAiStreamClient client;

@Before

public void before() {

//创建流式输出客户端

Proxy proxy = new Proxy(Proxy.Type.HTTP, new InetSocketAddress("192.168.1.111", 7890));

client = OpenAiStreamClient.builder()

.connectTimeout(50)

.readTimeout(50)

.writeTimeout(50)

.apiKey("sk-******************************")

.proxy(proxy)

.build();

}

//GPT-3.5-Turbo模型

@Test

public void chatCompletions() {

ConsoleEventSourceListener eventSourceListener = new ConsoleEventSourceListener();

Message message = Message.builder().role(Message.Role.USER).content("你好啊我的伙伴!").build();

ChatCompletion chatCompletion = ChatCompletion.builder().messages(Arrays.asList(message)).build();

client.streamChatCompletion(chatCompletion, eventSourceListener);

CountDownLatch countDownLatch = new CountDownLatch(1);

try {

countDownLatch.await();

} catch (InterruptedException e) {

e.printStackTrace();

}

}

//常规对话模型

@Test

public void completions() {

ConsoleEventSourceListener eventSourceListener = new ConsoleEventSourceListener();

Completion q = Completion.builder()

.prompt("我想申请转专业,从计算机专业转到会计学专业,帮我完成一份两百字左右的申请书")

.stream(true)

.build();

client.streamCompletions(q, eventSourceListener);

CountDownLatch countDownLatch = new CountDownLatch(1);

try {

countDownLatch.await();

} catch (InterruptedException e) {

e.printStackTrace();

}

}

}

输出的是sse流式数据:

22:51:23.620 [OkHttp - OpenAI建立sse连接...

22:51:23.623 [OkHttp - OpenAI返回数据:{****省略无效数据******"model":"gpt-3.5-turbo-0301","choices":[{"delta":{"role":"assistant"},"index":0,"finish_reason":null}]}

22:51:23.625 [OkHttp - OpenAI返回数据:{****省略无效数据******"model":"gpt-3.5-turbo-0301","choices":[{"delta":{"content":"你"},"index":0,"finish_reason":null}]}

22:51:23.636 [OkHttp - OpenAI返回数据:{****省略无效数据******"model":"gpt-3.5-turbo-0301","choices":[{"delta":{"content":"好"},"index":0,"finish_reason":null}]}

22:51:23.911 [OkHttp - OpenAI返回数据:{****省略无效数据******"model":"gpt-3.5-turbo-0301","choices":[{"delta":{"content":"!"},"index":0,"finish_reason":null}]}

22:51:23.911 [OkHttp - OpenAI返回数据:{****省略无效数据******"model":"gpt-3.5-turbo-0301","choices":[{"delta":{"content":"有"},"index":0,"finish_reason":null}]}

22:51:23.911 [OkHttp - OpenAI返回数据:{****省略无效数据******"model":"gpt-3.5-turbo-0301","choices":[{"delta":{"content":"什"},"index":0,"finish_reason":null}]}

22:51:23.911 [OkHttp - OpenAI返回数据:{****省略无效数据******"model":"gpt-3.5-turbo-0301","choices":[{"delta":{"content":"么"},"index":0,"finish_reason":null}]}

22:51:23.911 [OkHttp - OpenAI返回数据:{****省略无效数据******"model":"gpt-3.5-turbo-0301","choices":[{"delta":{"content":"我"},"index":0,"finish_reason":null}]}

22:51:23.911 [OkHttp - OpenAI返回数据:{****省略无效数据******"model":"gpt-3.5-turbo-0301","choices":[{"delta":{"content":"可以"},"index":0,"finish_reason":null}]}

22:51:23.911 [OkHttp - OpenAI返回数据:{****省略无效数据******"model":"gpt-3.5-turbo-0301","choices":[{"delta":{"content":"帮"},"index":0,"finish_reason":null}]}

22:51:23.912 [OkHttp - OpenAI返回数据:{****省略无效数据******"model":"gpt-3.5-turbo-0301","choices":[{"delta":{"content":"助"},"index":0,"finish_reason":null}]}

22:51:23.934 [OkHttp - OpenAI返回数据:{****省略无效数据******"model":"gpt-3.5-turbo-0301","choices":[{"delta":{"content":"你"},"index":0,"finish_reason":null}]}

22:51:24.203 [OkHttp - OpenAI返回数据:{****省略无效数据******"model":"gpt-3.5-turbo-0301","choices":[{"delta":{"content":"的"},"index":0,"finish_reason":null}]}

22:51:24.203 [OkHttp - OpenAI返回数据:{****省略无效数据******"model":"gpt-3.5-turbo-0301","choices":[{"delta":{"content":"吗"},"index":0,"finish_reason":null}]}

22:51:24.203 [OkHttp - OpenAI返回数据:{****省略无效数据******"model":"gpt-3.5-turbo-0301","choices":[{"delta":{"content":"?"},"index":0,"finish_reason":null}]}

22:51:24.276 [OkHttp - OpenAI返回数据:{****省略无效数据******"model":"gpt-3.5-turbo-0301","choices":[{"delta":{},"index":0,"finish_reason":"stop"}]}

22:51:24.276 [OkHttp - OpenAI返回数据:[DONE]

22:51:24.277 [OkHttp - OpenAI返回数据结束了

22:51:24.277 [OkHttp - OpenAI关闭sse连接...

流式输出如何集成Spring Boot实现 api接口?

可以参考项目:https://github.com/Grt1228/chatgpt-steam-output

实现自定义的EventSourceListener,例如:OpenAIEventSourceListener并持有一个SseEmitter,通过SseEmitter进行数据的通信

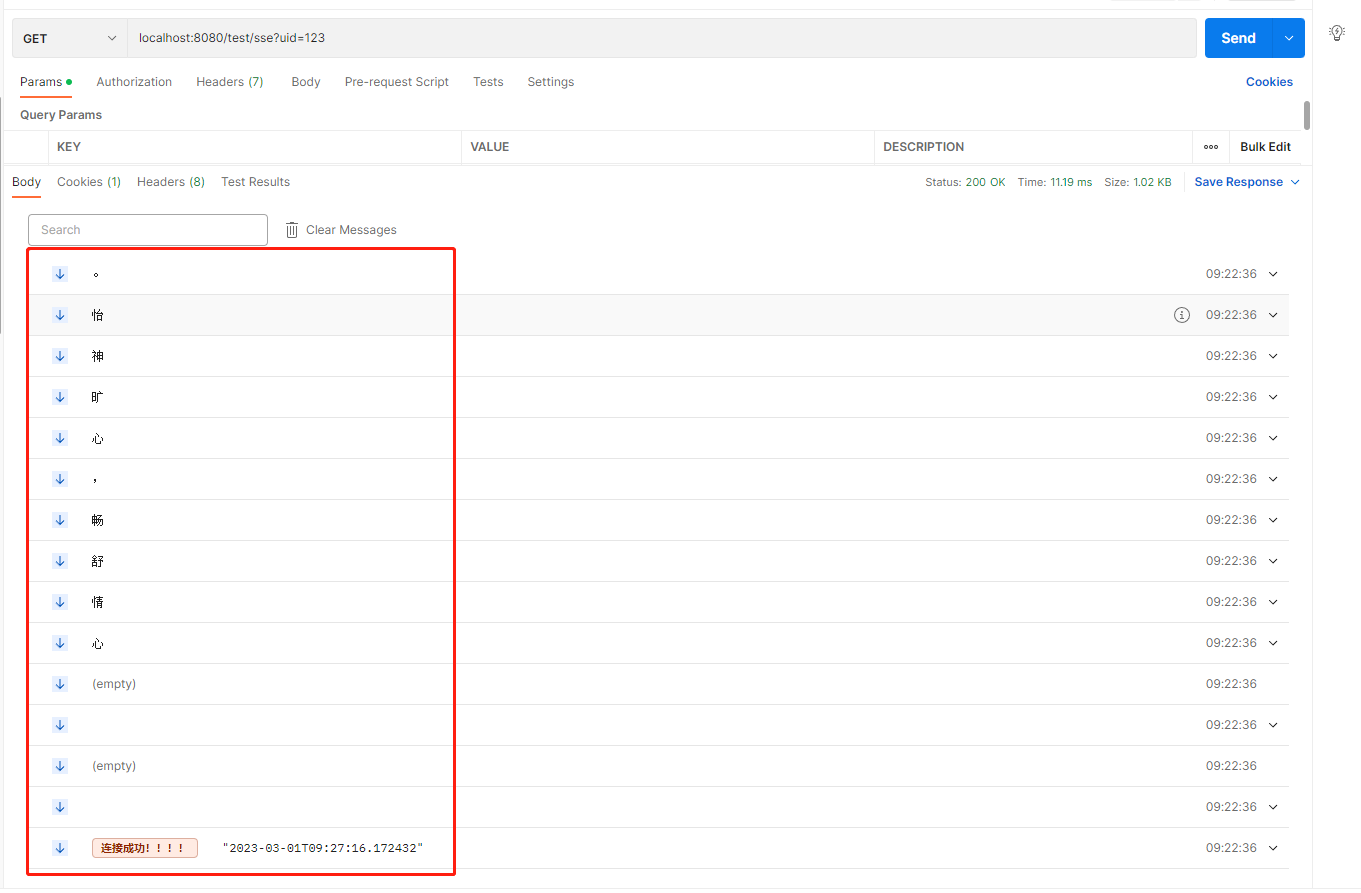

postman测试

发送请求:Get http://localhost:8080/test/sse?uid=123

看下response (需要新版本postman)

重点关注下header:Content-Type:text/event-stream

如果想结合前端显示自行百度sse前端相关实现

说明

支持最新版的语音转文字,语音翻译api请参考测试代码:OpenAiClientTest.java